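This post covers scraping the reading notes of the Douban Top250 books. Topics include crawling with requests, proxy settings, and the "producer and consumer" model (multithreading for speed).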

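First, the overall approach. The Douban Top250 list is a static web page, so we can crawl it directly with requests. There are three things to handle. First, pagination of the list (static URLs follow a pattern that is easy to construct). Second, crawling the detail page of every book on each list page. Third, I originally tried to scrape the book reviews, but they come with scraping limits; after looking into it, I found that only the notes can be scraped in full, so there is one more jump to each book's notes page plus a loop over its pages. The idea is simple: paginate, get each book's ID, crawl the notes pages, then plug all of this into the producer and consumer model to crawl quickly.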
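The URL patterns described above can be sketched as follows. This is a minimal, standard-library-only illustration under a few assumptions: the Top250 list uses `start` offsets in steps of 25, the notes pages use offsets in steps of 10 (as described later in this post), and book links end with a trailing slash, which is what makes `urljoin` append the relative notes path instead of replacing the last path segment. `list_page_urls` and `notes_page_urls` are hypothetical helper names, and the subject ID is only an example.

```python
from urllib.parse import urljoin

# Relative notes path used in this post; {page} is filled with the start offset
NOTES_PATH = "annotation?sort=rank&start={page}"


def list_page_urls(pages=10, per_page=25):
    """Top250 list pages: start=0, 25, ..., 225 (10 pages x 25 books)."""
    return [f"https://book.douban.com/top250?start={i * per_page}" for i in range(pages)]


def notes_page_urls(detail_url, pages=25, per_page=10):
    """Notes pages of one book; assumes detail_url ends with '/' so urljoin appends the path."""
    template = urljoin(detail_url, NOTES_PATH)
    return [template.format(page=m * per_page) for m in range(pages)]


if __name__ == "__main__":
    print(list_page_urls()[:2])
    # ['https://book.douban.com/top250?start=0', 'https://book.douban.com/top250?start=25']
    print(notes_page_urls("https://book.douban.com/subject/1007305/", pages=2))
    # ['https://book.douban.com/subject/1007305/annotation?sort=rank&start=0',
    #  'https://book.douban.com/subject/1007305/annotation?sort=rank&start=10']
```

Keeping the `{page}` placeholder in the joined URL lets the same template be formatted once per notes page, which mirrors how the scraper fills in the offset.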

The code is broken into pieces below. First, the producer:

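```python
class Producer(threading.Thread):
    def __init__(self, page_queue, detail_queue):  # the list-page queue and the detail-page queue
        super().__init__()
        self.page_queue = page_queue
        self.detail_queue = detail_queue

    def run(self):  # run() holds the code the thread executes after start()
        while True:
            try:
                # get_nowait() avoids blocking forever if another producer took the last page
                url = self.page_queue.get_nowait()
            except Empty:
                break
            self.parse_url(url)
            time.sleep(3)

    def parse_url(self, url):
        response = session.get(url, headers=headers)
        doc = pq(response.text)  # parse the list page
        for a in doc(".pl2 a").items():
            # Book detail URL + relative notes path -> notes URL template with a {page} placeholder
            notes_url = urljoin(a.attr("href"), pinlun)
            self.detail_queue.put(notes_url)  # add each book's notes URL to the queue
```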

Next, the consumer code:

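```python
class Consumer(threading.Thread):
    def __init__(self, page_queue, detail_queue):
        super().__init__()
        self.page_queue = page_queue
        self.detail_queue = detail_queue

    def run(self):
        while True:
            try:
                # Wait up to 10 s for a notes URL: producers may still be parsing list pages
                detail_url = self.detail_queue.get(timeout=10)
            except Empty:
                if self.page_queue.empty():
                    break  # nothing left to produce or consume
                continue
            self.save_notes(detail_url)

    def save_notes(self, detail_url):
        # URL shape: https://book.douban.com/subject/<id>/annotation?...; use <id> as the file name
        book_id = detail_url.split("/")[4]
        # Save each book's notes to its own txt file
        with open(f"your_save_path{book_id}.txt", "w", encoding="utf-8") as fo:
            for m in range(25):
                # The link pattern shows the start offset grows by 10 per notes page (page index * 10)
                response = requests.get(detail_url.format(page=m * 10), headers=headers)
                doc = pq(response.text)
                for note in doc("div.short > span").items():
                    print(note.text())
                    fo.write(note.text() + "\n")  # find the note nodes and write them to the txt file
                time.sleep(1)  # pause between notes pages to keep the request rate low
```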

Then comes the main function:

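```python
def main():
    page_queue = Queue(100)
    detail_queue = Queue(100)  # maximum queue sizes
    # List pages to crawl: range(1, 3) gives start=25 and 50 (pages 2 and 3);
    # range(10) would cover all 250 books
    for i in range(1, 3):
        url = f"https://book.douban.com/top250?start={i * 25}"
        page_queue.put(url)
    threads = []
    for i in range(2):  # number of producers
        t = Producer(page_queue, detail_queue)
        t.start()
        threads.append(t)
        time.sleep(1)
    for i in range(2):  # number of consumers
        t = Consumer(page_queue, detail_queue)
        t.start()
        threads.append(t)
        time.sleep(2)
    for t in threads:
        t.join()  # wait for every thread to finish
    print(f"Elapsed: {time.time() - start:.1f} s")
```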
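The producer and consumer decide when to stop by checking whether the queues are empty, which is inherently racy: a queue can be momentarily empty just because a producer is still parsing a page. A common alternative is to send one sentinel ("poison pill") per worker through the queue once all real work has been queued. The sketch below shows that pattern with the standard library only; it is not the code used in this post, the network work is replaced by placeholder strings, and `producer`, `consumer` and `run` are hypothetical names.

```python
import threading
from queue import Queue

SENTINEL = object()  # unique "no more work" marker


def producer(page_queue, detail_queue):
    while True:
        page = page_queue.get()
        if page is SENTINEL:
            break
        # Placeholder for parsing a list page: each page yields 3 fake book items
        for k in range(3):
            detail_queue.put(f"{page}-book{k}")


def consumer(detail_queue, results, lock):
    while True:
        item = detail_queue.get()
        if item is SENTINEL:
            break
        # Placeholder for downloading and saving one book's notes
        with lock:
            results.append(item)


def run(pages, n_producers=2, n_consumers=2):
    page_queue, detail_queue = Queue(), Queue(100)
    results, lock = [], threading.Lock()
    for p in pages:
        page_queue.put(p)
    for _ in range(n_producers):
        page_queue.put(SENTINEL)  # one stop signal per producer, after all real pages
    producers = [threading.Thread(target=producer, args=(page_queue, detail_queue))
                 for _ in range(n_producers)]
    consumers = [threading.Thread(target=consumer, args=(detail_queue, results, lock))
                 for _ in range(n_consumers)]
    for t in producers + consumers:
        t.start()
    for t in producers:
        t.join()  # every real item is now in detail_queue
    for _ in range(n_consumers):
        detail_queue.put(SENTINEL)  # queued behind all real items (FIFO)
    for t in consumers:
        t.join()
    return results


if __name__ == "__main__":
    print(len(run(["p1", "p2", "p3"])))  # 9
```

Because each worker stops only when it receives its own sentinel, and sentinels are queued after all real items, no worker exits early and none blocks forever on `get()`.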

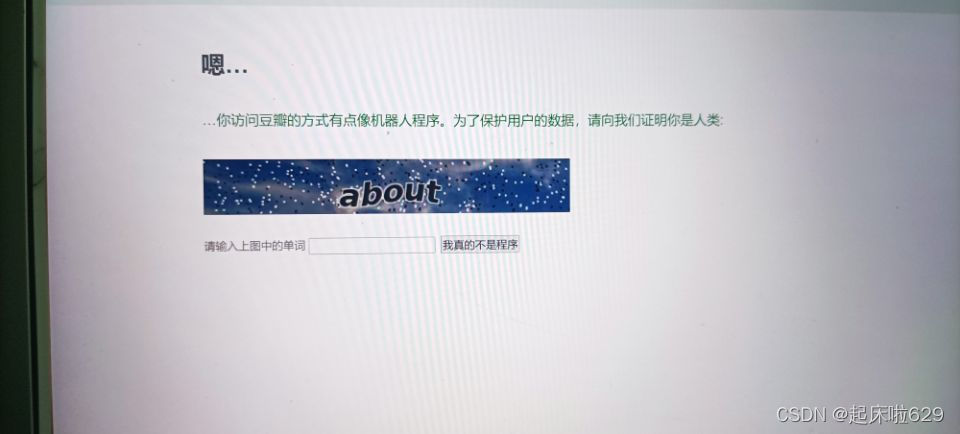

注意生产者和消费者的数量不要过多,避免对服务器造成压力,在爬取的过程中,多次爬取豆瓣会识别出来,如图所示:

所以控制好抓取频率,或者购买静态代理是比较可取的做法。
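One way to control the crawl rate across all threads is a shared limiter that enforces a minimum interval between requests. The sketch below is an illustration under stated assumptions, not part of the original code: `RateLimiter` and `make_proxies` are hypothetical names, the 2-second interval is an arbitrary example, and the proxy address is a placeholder for whatever your proxy provider gives you. The dictionary shape `{"http": ..., "https": ...}` is what the `proxies=` argument of requests expects.

```python
import threading
import time


class RateLimiter:
    """Shared across threads: at least `interval` seconds between successive wait() returns."""

    def __init__(self, interval):
        self.interval = interval
        self._lock = threading.Lock()
        self._last = float("-inf")  # monotonic time of the previous request

    def wait(self):
        # Holding the lock while sleeping makes the limit global: threads queue up here
        with self._lock:
            delay = self._last + self.interval - time.monotonic()
            if delay > 0:
                time.sleep(delay)
            self._last = time.monotonic()


def make_proxies(proxy_url):
    """Mapping in the shape accepted by the `proxies=` argument of requests."""
    return {"http": proxy_url, "https": proxy_url}


# Placeholder proxy address (hypothetical): replace with your provider's host, port and credentials
PROXY_URL = "http://user:password@127.0.0.1:8888"
limiter = RateLimiter(interval=2.0)  # assumption: at most one request every 2 s across all threads
```

In the consumer, you would call `limiter.wait()` right before each `requests.get(...)` and pass `proxies=make_proxies(PROXY_URL)` to it. Sleeping while holding the lock is deliberate: it serializes threads on the limiter, so the interval holds for the whole crawler rather than per thread.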

Finally, here is the complete code:

```python
import threading
import time
from queue import Queue, Empty
from urllib.parse import urljoin

import requests
from pyquery import PyQuery as pq

# Relative path of a book's notes page; {page} is filled with the start offset
pinlun = "annotation?sort=rank&start={page}"

session = requests.Session()
start = time.time()

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36 Edg/94.0.992.38",
    # Replace with the Cookie from your own logged-in Douban session
    "Cookie": "YOUR_DOUBAN_COOKIE",
}


class Producer(threading.Thread):
    def __init__(self, page_queue, detail_queue):
        super().__init__()
        self.page_queue = page_queue
        self.detail_queue = detail_queue

    def run(self):
        while True:
            try:
                url = self.page_queue.get_nowait()
            except Empty:
                break
            self.parse_url(url)
            time.sleep(3)

    def parse_url(self, url):
        response = session.get(url, headers=headers)
        doc = pq(response.text)
        for a in doc(".pl2 a").items():
            notes_url = urljoin(a.attr("href"), pinlun)
            self.detail_queue.put(notes_url)


class Consumer(threading.Thread):
    def __init__(self, page_queue, detail_queue):
        super().__init__()
        self.page_queue = page_queue
        self.detail_queue = detail_queue

    def run(self):
        while True:
            try:
                detail_url = self.detail_queue.get(timeout=10)
            except Empty:
                if self.page_queue.empty():
                    break
                continue
            self.save_notes(detail_url)

    def save_notes(self, detail_url):
        book_id = detail_url.split("/")[4]
        with open(f"your_save_path{book_id}.txt", "w", encoding="utf-8") as fo:
            for m in range(25):
                response = requests.get(detail_url.format(page=m * 10), headers=headers)
                doc = pq(response.text)
                for note in doc("div.short > span").items():
                    print(note.text())
                    fo.write(note.text() + "\n")
                time.sleep(1)


def main():
    page_queue = Queue(100)
    detail_queue = Queue(100)
    for i in range(1, 3):
        url = f"https://book.douban.com/top250?start={i * 25}"
        page_queue.put(url)
    threads = []
    for i in range(2):
        t = Producer(page_queue, detail_queue)
        t.start()
        threads.append(t)
        time.sleep(1)
    for i in range(2):
        t = Consumer(page_queue, detail_queue)
        t.start()
        threads.append(t)
        time.sleep(2)
    for t in threads:
        t.join()
    print(f"Elapsed: {time.time() - start:.1f} s")


if __name__ == "__main__":
    main()
```

And that's it! Feel free to leave a comment and share your thoughts!