GAN Lecture 1

Yann LeCun: Adversarial training is the coolest thing since sliced bread.

Outline

- Basic Idea of GAN

- GAN as Structured Learning

- Can Generator Learn by Itself?

- Can Discriminator Generate?

- A Little Bit of Theory

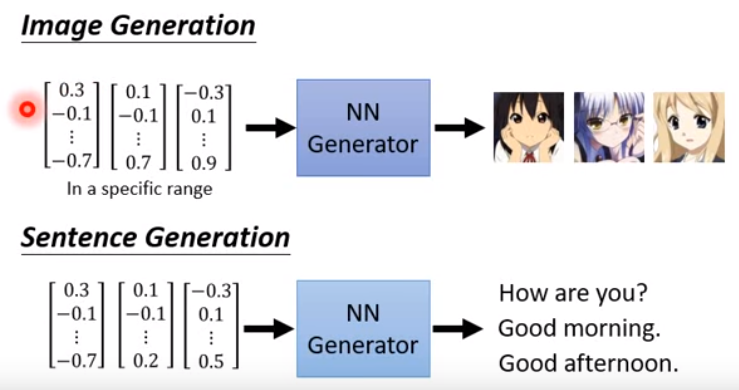

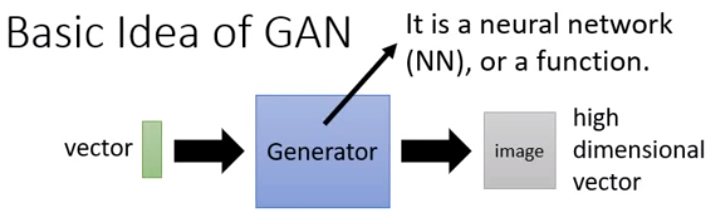

Basic Idea of GAN

Generation

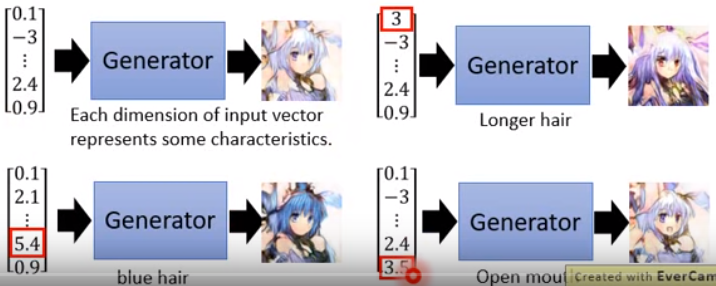

Generator: it is a neural network, or more generally a function.

Input vector: each dimension of the input vector represents some characteristic of the generated output.
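To make the "vector in, object out" idea concrete, here is a minimal sketch of a generator as a plain function. Everything in it is an illustrative assumption rather than part of the lecture: the name `make_generator`, the layer sizes, the `tanh` activations, and the random (untrained) weights.

```python
import math
import random


def make_generator(z_dim, out_dim, hidden=8, seed=0):
    """Build a tiny two-layer generator G: R^z_dim -> R^out_dim.

    Hypothetical sizes and random, untrained weights; for illustration only.
    """
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 0.5) for _ in range(z_dim)] for _ in range(hidden)]
    w2 = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(out_dim)]

    def generator(z):
        # Hidden layer: weighted sum of the input vector, squashed by tanh.
        h = [math.tanh(sum(w * zi for w, zi in zip(row, z))) for row in w1]
        # Output layer: each output component is kept within [-1, 1].
        return [math.tanh(sum(w * hi for w, hi in zip(row, h))) for row in w2]

    return generator


G = make_generator(z_dim=3, out_dim=4)
z = [0.1, -0.3, 0.8]
x = G(z)

# Changing a single input dimension changes the generated output.
z_shifted = [z[0] + 1.0, z[1], z[2]]
x_shifted = G(z_shifted)
```

In a trained generator, moving along one input dimension would change one characteristic of the output; with random weights the change has no particular meaning.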

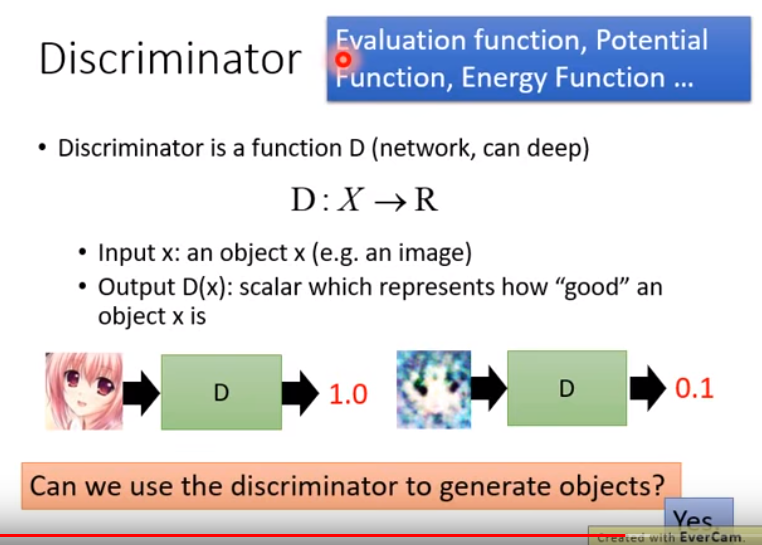

Discriminator

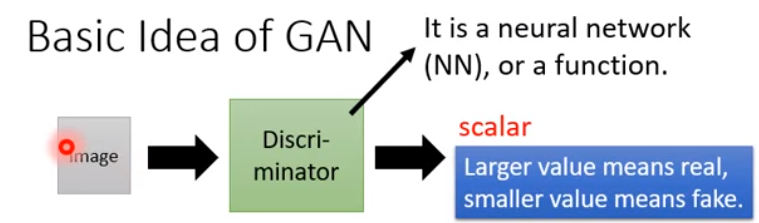

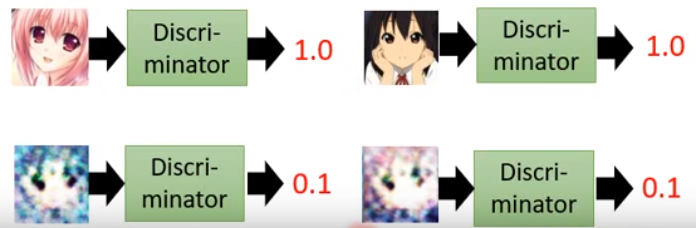

Discriminator: it is a neural network or a function.

Output scalar: a larger value means real, a smaller value means fake.
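A discriminator can be sketched the same way: a function that takes an object and returns one scalar. The sketch below is an assumption-laden illustration, not the lecture's model: `make_discriminator` is a hypothetical name, the model is a single linear layer, and the sigmoid is one common way to keep the score in (0, 1).

```python
import math
import random


def make_discriminator(in_dim, seed=0):
    """Build a tiny discriminator D: R^in_dim -> (0, 1).

    Hypothetical, untrained linear model; for illustration only.
    """
    rng = random.Random(seed)
    w = [rng.gauss(0, 0.5) for _ in range(in_dim)]
    b = 0.0

    def discriminator(x):
        # Weighted sum of the input, squashed to a single scalar score.
        score = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-score))

    return discriminator


D = make_discriminator(in_dim=4)
score = D([0.2, -0.1, 0.5, 0.9])  # closer to 1 means "looks real"
```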

GAN Algorithm

Initialize generator and discriminator

In each training iteration:

Step 1: fix generator G, and update discriminator D

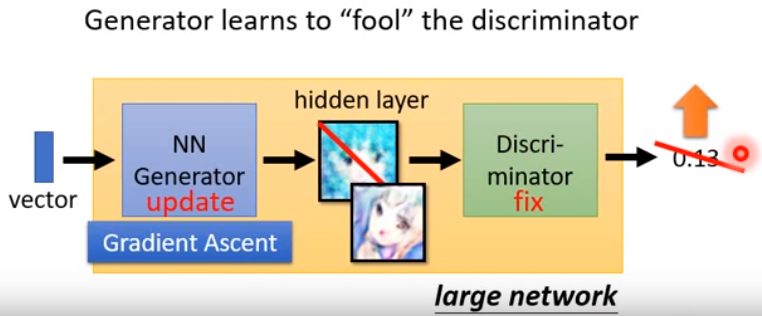

Step 2: fix discriminator D, and update generator G

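Initialize $\theta_d$ for D and $\theta_g$ for G

In each training iteration:

Learning D

- Sample m examples $\{x^1, x^2, \dots, x^m\}$ from the database

- Sample m noise samples $\{z^1, z^2, \dots, z^m\}$ from a distribution

- Obtain generated data $\{\tilde{x}^1, \tilde{x}^2, \dots, \tilde{x}^m\}$, where $\tilde{x}^i = G(z^i)$

- Update discriminator parameters $\theta_d$ to maximize the discriminator's scores on the real examples while minimizing its scores on the generated data

Learning G

- Sample m noise samples $\{z^1, z^2, \dots, z^m\}$ from a distribution

- Update generator parameters $\theta_g$ to maximize the discriminator's scores on the generated data $G(z^i)$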
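The two alternating updates can be sketched on a toy one-dimensional problem. This is a minimal sketch under stated assumptions, not the lecture's implementation: the objectives are the standard GAN ones (D ascends `mean(log D(x)) + mean(log(1 - D(G(z))))`, G ascends `mean(log D(G(z)))`), the "database" is a Gaussian centered at 4, the generator is `G(z) = a*z + b`, the discriminator is `D(x) = sigmoid(w*x + c)`, and the gradients are written out by hand. The function name and all hyperparameters are hypothetical.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(iterations=200, m=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters theta_g: G(z) = a*z + b
    w, c = 0.0, 0.0  # discriminator parameters theta_d: D(x) = sigmoid(w*x + c)

    for _ in range(iterations):
        # ---- Learning D (generator fixed) ----
        xs = [rng.gauss(4.0, 0.5) for _ in range(m)]  # stand-in for the database
        zs = [rng.gauss(0.0, 1.0) for _ in range(m)]  # noise samples
        fakes = [a * z + b for z in zs]               # generated data

        # Gradient of mean(log D(x)) + mean(log(1 - D(x_fake))) w.r.t. (w, c).
        gw = gc = 0.0
        for x in xs:
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x / m
            gc += (1.0 - d) / m
        for x in fakes:
            d = sigmoid(w * x + c)
            gw -= d * x / m
            gc -= d / m
        w += lr * gw  # gradient ascent on the discriminator objective
        c += lr * gc

        # ---- Learning G (discriminator fixed) ----
        zs = [rng.gauss(0.0, 1.0) for _ in range(m)]

        # Gradient of mean(log D(G(z))) w.r.t. (a, b).
        ga = gb = 0.0
        for z in zs:
            d = sigmoid(w * (a * z + b) + c)
            ga += (1.0 - d) * w * z / m
            gb += (1.0 - d) * w / m
        a += lr * ga  # gradient ascent on the generator objective
        b += lr * gb

    return (a, b), (w, c)


(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
```

With such a simple discriminator the parameters may oscillate rather than settle, so this only illustrates the order of the updates, not a well-behaved training recipe.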

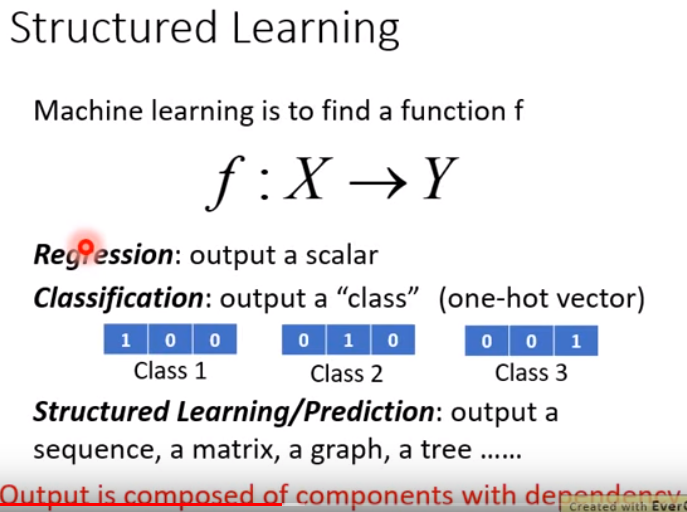

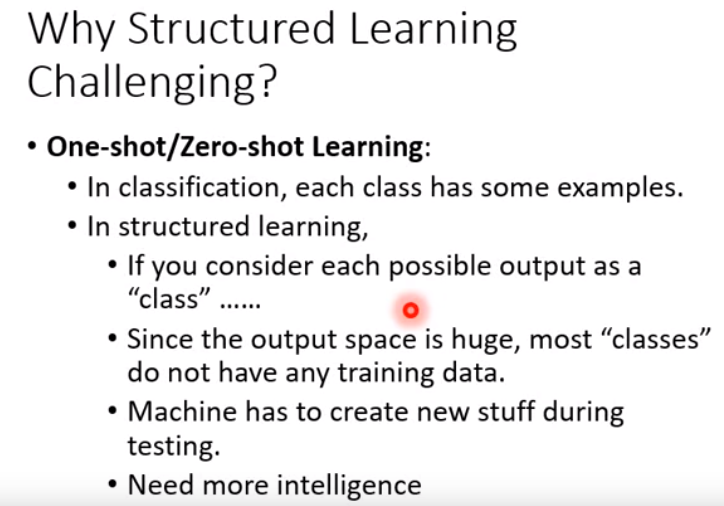

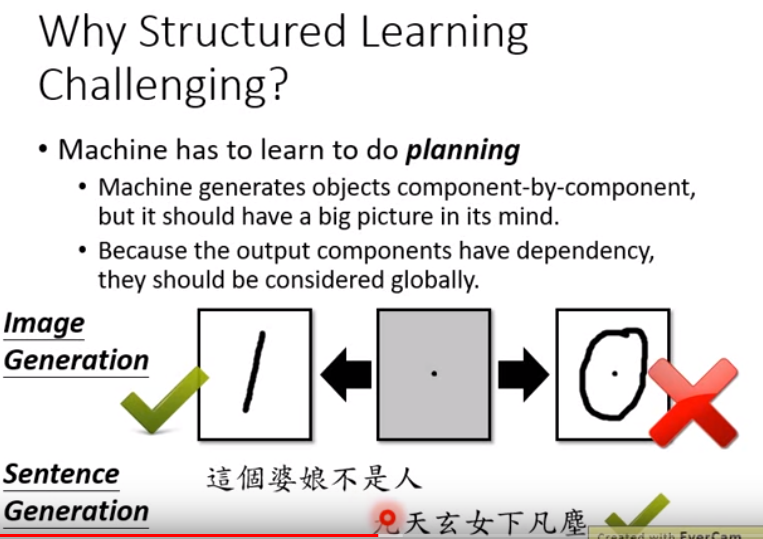

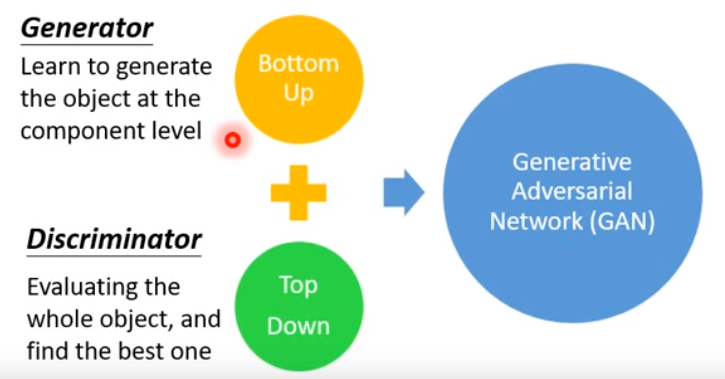

GAN as Structured Learning

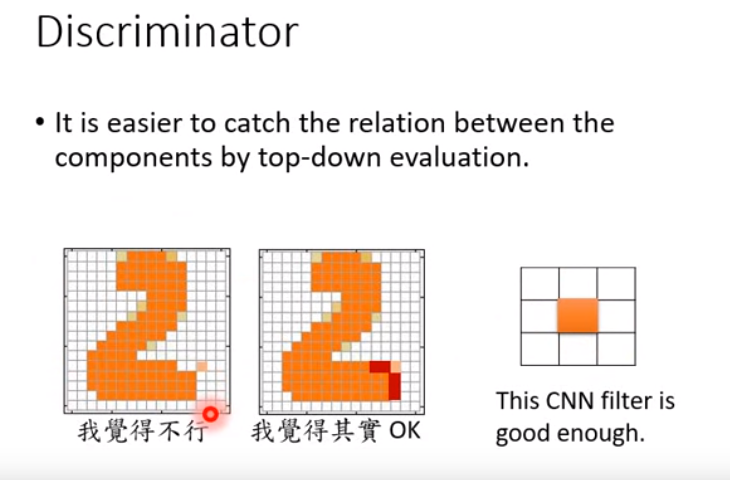

Structured Learning Approach

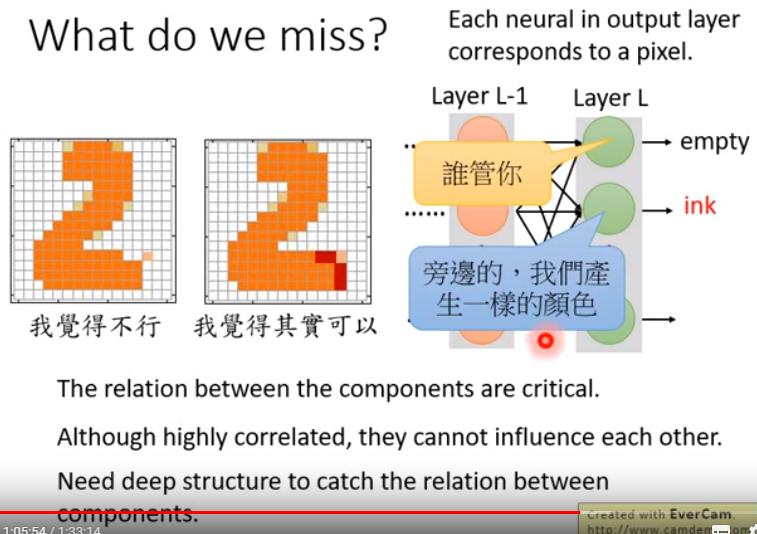

Bottom Up: Learn to generate the object at the component level

This approach lacks the big picture.

Top Down: Evaluate the whole object and find the best one

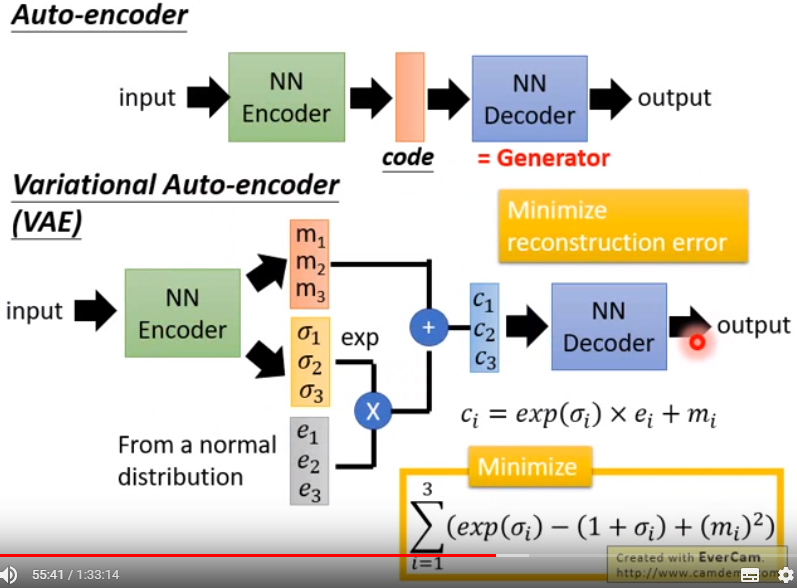

Can Generator Learn by Itself?

If an Auto-Encoder is used as the generator, a deeper network structure is needed.

Can Discriminator Generate?

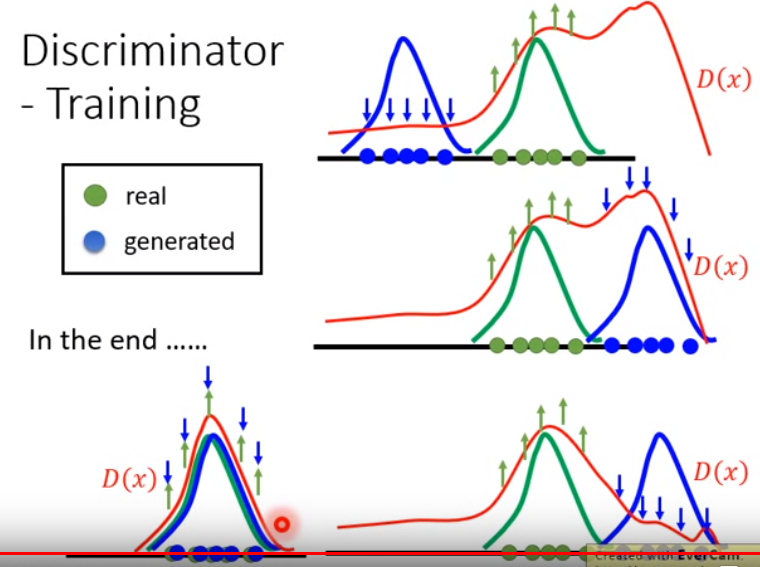

Discriminator -- Training

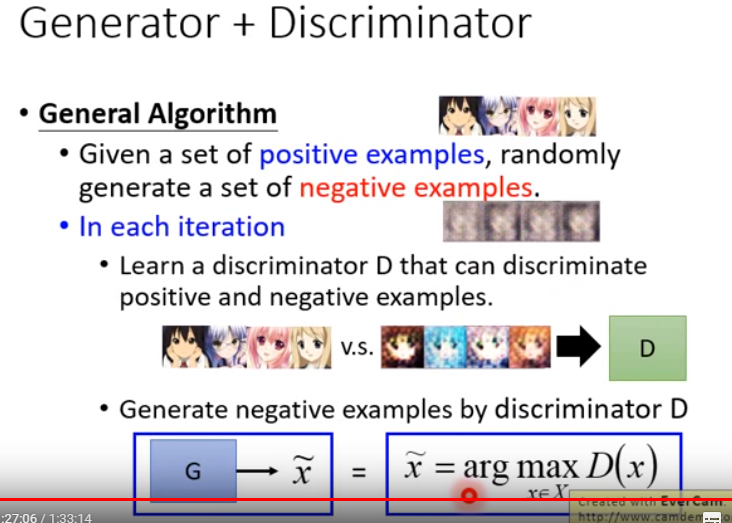

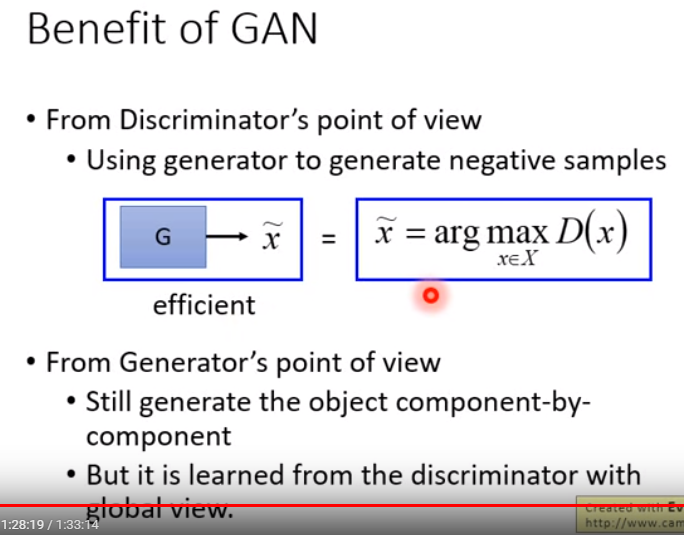

- General Algorithm

  - Given a set of positive examples, randomly generate a set of negative examples.

  - In each iteration

    - Learn a discriminator D that can discriminate positive and negative examples.

    - Generate negative examples by discriminator D: $\tilde{x} = \arg\max_{x \in X} D(x)$
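The general algorithm above can be sketched on a toy problem where the arg max is tractable because the candidate set is a small grid. All specifics here are assumptions made for illustration: the quadratic logistic model, the function names, the choice to take the top-k candidates as the new negatives, and replacing (rather than accumulating) negatives each iteration.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def features(x):
    # Quadratic features so D can peak in the interior of the grid.
    return (x, x * x, 1.0)


def train_discriminator(positives, negatives, steps=300, lr=0.5):
    """Fit a logistic discriminator by gradient ascent on the log-likelihood."""
    data = [(p, 1.0) for p in positives] + [(q, 0.0) for q in negatives]
    w = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for x, label in data:
            f = features(x)
            d = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            for k in range(3):
                grad[k] += (label - d) * f[k] / len(data)
        w = [wi + lr * gi for wi, gi in zip(w, grad)]
    return lambda x: sigmoid(sum(wi * fi for wi, fi in zip(w, features(x))))


def generate_by_argmax(D, candidates, k):
    # Approximate x~ = arg max_x D(x) by taking the k best-scoring candidates.
    return sorted(candidates, key=D, reverse=True)[:k]


def discriminator_only_training(positives, candidates, iterations=5, seed=0):
    rng = random.Random(seed)
    # Start from randomly generated negative examples.
    negatives = [rng.choice(candidates) for _ in positives]
    for _ in range(iterations):
        D = train_discriminator(positives, negatives)
        negatives = generate_by_argmax(D, candidates, len(positives))
    return D, negatives


rng = random.Random(1)
candidates = [i / 50 - 1 for i in range(101)]  # grid over [-1, 1]
positives = [0.3 + 0.05 * rng.gauss(0, 1) for _ in range(20)]
D, negatives = discriminator_only_training(positives, candidates)
```

Sorting a grid only works because this toy space has 101 candidates. For images or other high-dimensional objects, solving the arg max is the hard part.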

Generator vs. Discriminator

- Generator

  - Pros:

    - Easy to generate, even with a deep model.

  - Cons:

    - Imitates the appearance only.

    - Hard to learn the correlation between components.

- Discriminator

  - Pros:

    - Considers the big picture.

  - Cons:

    - Generation is not always feasible, especially when your model is deep.

    - How to do negative sampling?

Discriminator + Generator -- Training

- General Algorithm

  - Given a set of positive examples, randomly generate a set of negative examples.

  - In each iteration

    - Learn a discriminator D that can discriminate positive and negative examples.

    - Generate negative examples for discriminator D using the generator G, which stands in for $\tilde{x} = \arg\max_{x \in X} D(x)$