title: iOS Audio Programming: Mixing

date: 2017-04-19

tags: Audio Unit, AUGraph, Mixer, mixing


iOS Audio Programming: Mixing

Requirement: mix several audio sources together and play the mixed result.

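Project overview: the project mixes 4 audio sources, which include files with a 44100 Hz sample rate and a 3000 Hz sample rate, as well as mono and stereo files. The mixing is wrapped in MixerVoiceHandle: the caller only has to initialize it with an array of audio file paths and call the start-mixing interface to get the mixed output of all sources.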

AVAudioSession setup

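The AVAudioSession configuration is deliberately not wrapped inside MixerVoiceHandle, because the host app may need a different session configuration (for example, for recording). For mixing, it is enough that the session allows playback and that its buffer duration and sample rate match the values MixerVoiceHandle uses.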

NSError *error = nil;

AVAudioSession *sessionInstance = [AVAudioSession sharedInstance];

[sessionInstance setCategory:AVAudioSessionCategoryPlayback error:&error];

handleError(error);

NSTimeInterval bufferDuration = kSessionBufDuration;

[sessionInstance setPreferredIOBufferDuration:bufferDuration error:&error];

handleError(error);

double hwSampleRate = kGraphSampleRate;

[sessionInstance setPreferredSampleRate:hwSampleRate error:&error];

handleError(error);

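// Activate the session; the preferred values above are only requests to the system

[sessionInstance setActive:YES error:&error];

handleError(error);

// Next, register for AVAudioSessionInterruptionNotification and AVAudioSessionRouteChangeNotification (omitted here)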

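kSessionBufDuration is 0.005 s and kGraphSampleRate is 44100 Hz.

The session's IOBufferDuration determines how much audio each Audio Unit callback handles per call: with 0.005 s, for example, a recording callback is invoked each time about 0.005 s of audio has been captured. The preferred duration is only a request; the value the system actually grants can be read back from the session's IOBufferDuration property.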

Reading the audio data

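Reading the audio data into memory takes a while, so it runs on a background thread. Because initializing the AUGraph needs information obtained while reading the files, both the file reading and the mixer setup are placed on the same background serial queue.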

// calloc zero-fills the array, so rightData stays NULL for mono sources

_mSoundBufferP = (SoundBufferPtr)calloc(self.sourceArr.count, sizeof(SoundBuffer));

for (int i = 0; i < self.sourceArr.count; i++) {

NSLog(@"read Audio file : %@",self.sourceArr[i]);

CFURLRef url = CFURLCreateWithFileSystemPath(kCFAllocatorDefault, (__bridge CFStringRef)self.sourceArr[i], kCFURLPOSIXPathStyle, false);

ExtAudioFileRef fp;

//open the audio file

CheckError(ExtAudioFileOpenURL(url, &fp), "cant open the file");

CFRelease(url); // created with a CF "Create" function, so we own it and must release it

AudioStreamBasicDescription fileFormat;

UInt32 propSize = sizeof(fileFormat);

//read the file data format , it represents the file's actual data format.

CheckError(ExtAudioFileGetProperty(fp, kExtAudioFileProperty_FileDataFormat,

&propSize, &fileFormat),

"read audio data format from file");

double rateRatio = kGraphSampleRate/fileFormat.mSampleRate;

UInt32 channel = 1;

if (fileFormat.mChannelsPerFrame == 2) {

channel = 2;

}

AVAudioFormat *clientFormat = [[AVAudioFormat alloc] initWithCommonFormat:AVAudioPCMFormatFloat32

sampleRate:kGraphSampleRate

channels:channel

interleaved:NO];

propSize = sizeof(AudioStreamBasicDescription);

// set the format in which the audio is read out of the file (the client data format)

CheckError(ExtAudioFileSetProperty(fp, kExtAudioFileProperty_ClientDataFormat,

propSize, clientFormat.streamDescription),

"cant set the file output format");

//get the file's length in sample frames

UInt64 numFrames = 0;

propSize = sizeof(numFrames);

CheckError(ExtAudioFileGetProperty(fp, kExtAudioFileProperty_FileLengthFrames,

&propSize, &numFrames),

"cant get the fileLengthFrames");

// convert the length from frames at the file's rate to frames at kGraphSampleRate

numFrames = (UInt64)(numFrames * rateRatio);

_mSoundBufferP[i].numFrames = (UInt32)numFrames;

_mSoundBufferP[i].channelCount = channel;

_mSoundBufferP[i].asbd = *(clientFormat.streamDescription);

_mSoundBufferP[i].leftData = (Float32 *)calloc(numFrames, sizeof(Float32));

if (channel == 2) {

_mSoundBufferP[i].rightData = (Float32 *)calloc(numFrames, sizeof(Float32));

}

_mSoundBufferP[i].sampleNum = 0;

// for stereo, the AudioBufferList needs room for one more AudioBuffer to hold the right-channel data

AudioBufferList *bufList = (AudioBufferList *)malloc(sizeof(AudioBufferList) + sizeof(AudioBuffer)*(channel-1));

AudioBuffer emptyBuffer = {0};

for (int arrayIndex = 0; arrayIndex < channel; arrayIndex++) {

bufList->mBuffers[arrayIndex] = emptyBuffer;

}

bufList->mNumberBuffers = channel;

bufList->mBuffers[0].mNumberChannels = 1;

bufList->mBuffers[0].mData = _mSoundBufferP[i].leftData;

bufList->mBuffers[0].mDataByteSize = (UInt32)numFrames*sizeof(Float32);

if (2 == channel) {

bufList->mBuffers[1].mNumberChannels = 1;

bufList->mBuffers[1].mDataByteSize = (UInt32)numFrames*sizeof(Float32);

bufList->mBuffers[1].mData = _mSoundBufferP[i].rightData;

}

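UInt32 numberOfFramesToRead = (UInt32)numFrames;

CheckError(ExtAudioFileRead(fp, &numberOfFramesToRead,

bufList),

"cant read the audio file");

// ExtAudioFileRead writes back the number of frames actually read; keep that count

// so the render callback never plays past the decoded data

_mSoundBufferP[i].numFrames = numberOfFramesToRead;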

free(bufList);

ExtAudioFileDispose(fp);

}

This code reads each audio file into the _mSoundBufferP array, converted to the format set with kExtAudioFileProperty_ClientDataFormat.

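If you use your own audio files and ExtAudioFileRead returns -50 (kAudio_ParamError), the cause is usually an incorrect destination format set via kExtAudioFileProperty_ClientDataFormat, for example when the source file is mono but the requested destination format is stereo.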

Mixer setup

CheckError(NewAUGraph(&_mGraph), "cant new a graph");

AUNode mixerNode;

AUNode outputNode;

AudioComponentDescription mixerACD;

mixerACD.componentType = kAudioUnitType_Mixer;

mixerACD.componentSubType = kAudioUnitSubType_MultiChannelMixer;

mixerACD.componentManufacturer = kAudioUnitManufacturer_Apple;

mixerACD.componentFlags = 0;

mixerACD.componentFlagsMask = 0;

AudioComponentDescription outputACD;

outputACD.componentType = kAudioUnitType_Output;

outputACD.componentSubType = kAudioUnitSubType_RemoteIO;

outputACD.componentManufacturer = kAudioUnitManufacturer_Apple;

outputACD.componentFlags = 0;

outputACD.componentFlagsMask = 0;

CheckError(AUGraphAddNode(_mGraph, &mixerACD,

&mixerNode),

"cant add node");

CheckError(AUGraphAddNode(_mGraph, &outputACD,

&outputNode),

"cant add node");

CheckError(AUGraphConnectNodeInput(_mGraph, mixerNode, 0, outputNode, 0),

"connect mixer Node to output node error");

CheckError(AUGraphOpen(_mGraph), "cant open the graph");

CheckError(AUGraphNodeInfo(_mGraph, mixerNode,

NULL, &_mMixer),

"generate mixer unit error");

CheckError(AUGraphNodeInfo(_mGraph, outputNode, NULL, &_mOutput),

"generate remote I/O unit error");

UInt32 numberOfMixBus = (UInt32)self.sourceArr.count;

// configure the number of mixer input buses: one per audio file to be mixed

CheckError(AudioUnitSetProperty(_mMixer, kAudioUnitProperty_ElementCount, kAudioUnitScope_Input, 0,

&numberOfMixBus, sizeof(numberOfMixBus)),

"set mix elements error");

// Increase the maximum frames per slice allows the mixer unit to accommodate the

// larger slice size used when the screen is locked.

UInt32 maximumFramesPerSlice = 4096;

CheckError( AudioUnitSetProperty (_mMixer,

kAudioUnitProperty_MaximumFramesPerSlice,

kAudioUnitScope_Global,

0,

&maximumFramesPerSlice,

sizeof (maximumFramesPerSlice)

), "cant set kAudioUnitProperty_MaximumFramesPerSlice");

for (UInt32 i = 0; i < numberOfMixBus; i++) {

// setup render callback struct

AURenderCallbackStruct rcbs;

rcbs.inputProc = &renderInput;

rcbs.inputProcRefCon = _mSoundBufferP;

CheckError(AUGraphSetNodeInputCallback(_mGraph, mixerNode, i, &rcbs),

"set mixerNode callback error");

AVAudioFormat *clientFormat = [[AVAudioFormat alloc] initWithCommonFormat:AVAudioPCMFormatFloat32

sampleRate:kGraphSampleRate

channels:_mSoundBufferP[i].channelCount

interleaved:NO];

CheckError(AudioUnitSetProperty(_mMixer, kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input, i,

clientFormat.streamDescription, sizeof(AudioStreamBasicDescription)),

"cant set the input scope format on bus[i]");

}

double sample = kGraphSampleRate;

CheckError(AudioUnitSetProperty(_mMixer, kAudioUnitProperty_SampleRate,

kAudioUnitScope_Output, 0, &sample, sizeof(sample)),

"cant set the mixer unit output sample rate");

// Not set here: the stream format (AudioStreamBasicDescription) of the mixer unit's output scope, element 0, and the output format (AudioStreamBasicDescription) of the I/O unit's output scope, element 1

//CheckError(AudioUnitSetProperty(_mMixer, kAudioUnitProperty_StreamFormat,

//kAudioUnitScope_Output, 0, xxxx, sizeof(AudioStreamBasicDescription)), "xxx");

CheckError(AUGraphInitialize(_mGraph), "cant initial graph");

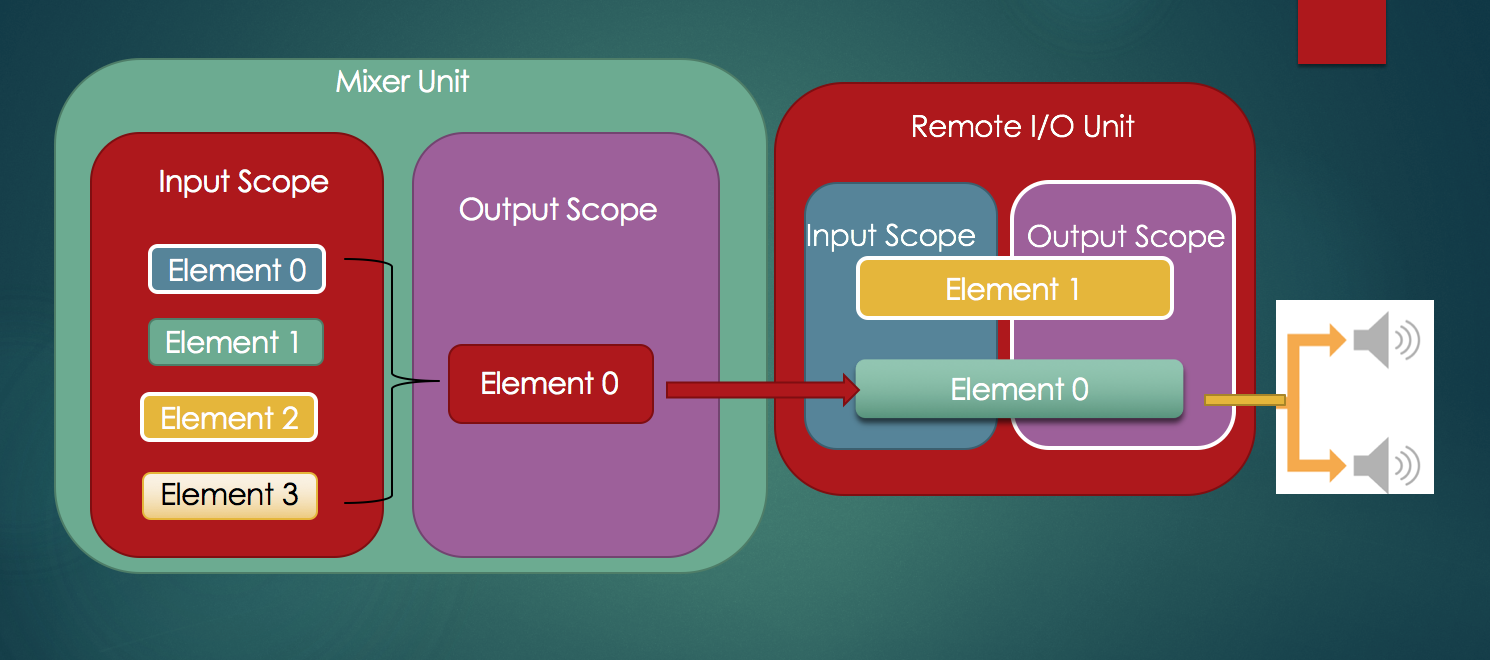

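The overall flow: create the AUGraph -> create the AUNodes (the mixer node and the audio output node) -> connect the mixer node to the output node (once connected, the mixer's output flows directly into the output Audio Unit) -> get the corresponding Audio Unit from each AUNode -> set the number of mixer input buses -> set each bus's input callback and input stream format -> set the mixer's output sample rate -> initialize the AUGraph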

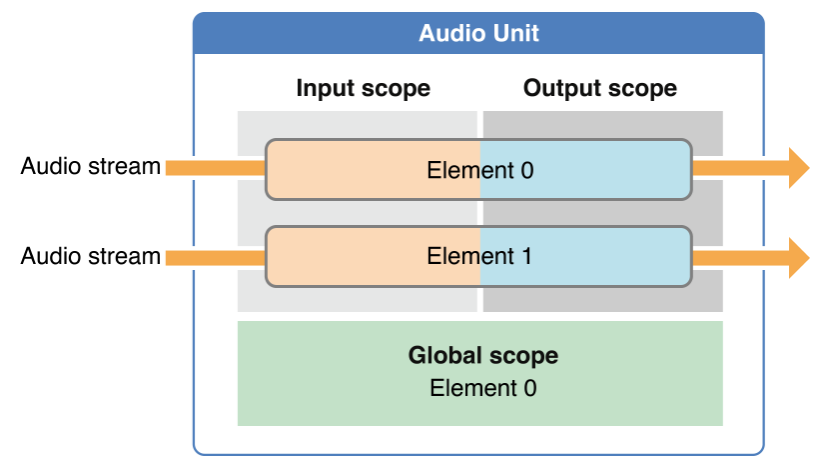

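The figure above shows an Audio Unit. Applied to the mixer unit (type kAudioUnitType_Mixer, subtype kAudioUnitSubType_MultiChannelMixer), my own understanding is as follows: the left side is the Mixer Unit and the right side is the Remote I/O Unit. The number of elements (buses) in the Mixer Unit's input scope is set with kAudioUnitProperty_ElementCount, and each of those input elements gets its own stream format and input callback; the mixer combines the sources onto element 0 of its output scope.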

Mixer input callback

static OSStatus renderInput(void *inRefCon,

AudioUnitRenderActionFlags *ioActionFlags,

const AudioTimeStamp *inTimeStamp,

UInt32 inBusNumber, UInt32 inNumberFrames,

AudioBufferList *ioData)

{

SoundBufferPtr sndbuf = (SoundBufferPtr)inRefCon;

UInt32 sample = sndbuf[inBusNumber].sampleNum; // frame number to start from

UInt32 bufSamples = sndbuf[inBusNumber].numFrames; // total number of frames in the sound buffer

Float32 *leftData = sndbuf[inBusNumber].leftData; // audio data buffer

Float32 *rightData = NULL;

Float32 *outL = (Float32 *)ioData->mBuffers[0].mData; // output audio buffer for L channel

Float32 *outR = NULL;

if (sndbuf[inBusNumber].channelCount == 2) {

outR = (Float32 *)ioData->mBuffers[1].mData; //out audio buffer for R channel;

rightData = sndbuf[inBusNumber].rightData;

}

for (UInt32 i = 0; i < inNumberFrames; ++i) {

outL[i] = leftData[sample];

if (sndbuf[inBusNumber].channelCount == 2) {

outR[i] = rightData[sample];

}

sample++;

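if (sample >= bufSamples) {

// start over from the beginning of the data, our audio simply loops
// (>= rather than >: otherwise leftData[bufSamples], one past the end, would be read on the next frame)
// printf is for debugging only; it is not real-time safe on the render thread

printf("looping data for bus %u after %u source frames rendered\n", (unsigned int)inBusNumber, (unsigned int)sample);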

sample = 0;

}

}

sndbuf[inBusNumber].sampleNum = sample; // keep track of where we are in the source data buffer

return noErr;

}

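The callback copies each source's in-memory audio data into the callback's ioData->mBuffers[x].mData, where x is 0 (the left channel, or the only channel for mono) or 1 (the right channel).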

Starting or stopping the AUGraph

Once initialization is done, call AUGraphStart(_mGraph) to start mixing, and the device will play the mixed audio; call AUGraphStop(_mGraph) to stop the output.

Volume and per-source enable control

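The volume of each source can be controlled individually (set kMultiChannelMixerParam_Volume on the corresponding element in the Mixer Unit's input scope), and the overall volume can be controlled as well (set kMultiChannelMixerParam_Volume on element 0 in the Mixer Unit's output scope).

Setting kMultiChannelMixerParam_Enable on an element in the Mixer Unit's input scope controls whether that source is included in the mix.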